
Comparing activation functions and optimizers (Fashion-MNIST data)

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from tensorflow.keras.datasets import fashion_mnist

data = fashion_mnist.load_data()

data

(X_train, y_train),(X_test, y_test) = data

print(X_train.shape)

print(y_train.shape)

print(X_test.shape)

print(y_test.shape)

(60000, 28, 28)

(60000,)

(10000, 28, 28)

(10000,)

- One-hot encode the labels

y_train_one_hot = pd.get_dummies(y_train)

y_test_one_hot = pd.get_dummies(y_test)
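To make the encoding concrete, here is a small sketch that uses NumPy instead of `pd.get_dummies`. The `labels` array and `num_classes` are made up for illustration. Each label becomes a length-10 vector containing a single 1, which is the target shape a 10-unit softmax output trained with `categorical_crossentropy` expects.

```python
import numpy as np

# Hypothetical labels: a tiny stand-in for y_train (Fashion-MNIST has 10 classes, 0-9).
labels = np.array([9, 0, 3])
num_classes = 10

# Row i of the identity matrix is the one-hot vector for class i,
# so indexing the identity matrix with the labels encodes all of them at once.
one_hot = np.eye(num_classes, dtype=int)[labels]

print(one_hot)
print(one_hot.argmax(axis=1))  # argmax recovers the original integer labels
```

Taking `argmax` along each row reverses the encoding, which is also how predicted probabilities are turned back into class labels.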

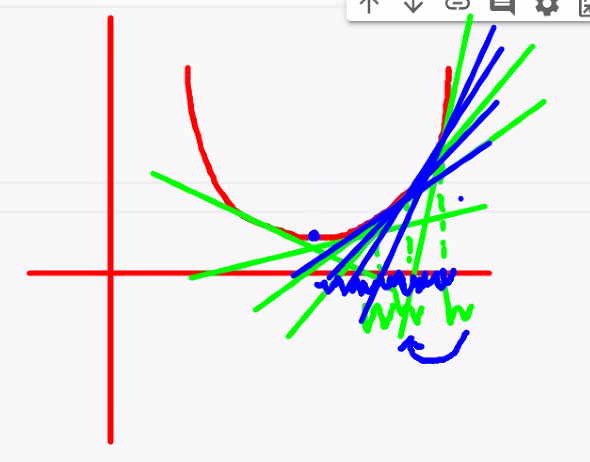

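Comparison by activation function and optimizer

- 1. Hidden-layer activation: sigmoid, optimizer: SGD

- 2. Hidden-layer activation: relu, optimizer: SGD

- 3. Hidden-layer activation: relu, optimizer: Adam

- Design a network for each configuration and compare the results

- Plot acc and val_acc for every model as six lines on a single chart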
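One commonly cited reason why sigmoid and relu hidden layers can train differently is the size of their derivatives. The sketch below is a simplified illustration, not a measurement of the models in this post: it ignores the weight matrices and only compares the activation-derivative factor that backpropagation multiplies in for each hidden layer. The helper names are made up for the example.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    # Derivative of the sigmoid: s * (1 - s)
    s = sigmoid(x)
    return s * (1.0 - s)

def relu_grad(x):
    # Derivative of relu: 1 for positive inputs, 0 otherwise
    return 1.0 if x > 0 else 0.0

# The sigmoid derivative is largest at x = 0, where it equals 0.25.
max_sigmoid_grad = sigmoid_grad(0.0)

# Best-case activation factor after passing back through 3 hidden layers.
depth = 3
sigmoid_factor = max_sigmoid_grad ** depth  # 0.25 ** 3
relu_factor = relu_grad(1.0) ** depth       # stays 1.0 for active units

print(max_sigmoid_grad, sigmoid_factor, relu_factor)
```

Even in the best case the sigmoid factor shrinks with every layer, while active relu units pass the gradient through unchanged.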

from tensorflow.keras import Sequential

from tensorflow.keras.layers import Dense, Flatten

model = Sequential()

model.add(Flatten(input_shape=(28,28)))

model.add(Dense(500, activation="sigmoid"))

model.add(Dense(300, activation="sigmoid"))

model.add(Dense(100, activation="sigmoid"))

model.add(Dense(10, activation="softmax"))

model.compile(loss="categorical_crossentropy",

optimizer="SGD",

metrics=['acc'])

from sklearn.model_selection import train_test_split

X_train, X_val, y_train_one_hot, y_val = train_test_split(X_train,y_train_one_hot,

random_state=3)

h = model.fit(X_train,y_train_one_hot,

epochs=50, batch_size=128,

validation_data=(X_val, y_val))

model1 = Sequential()

model1.add(Flatten(input_shape=(28,28)))

model1.add(Dense(500, activation="relu"))

model1.add(Dense(300, activation="relu"))

model1.add(Dense(100, activation="relu"))

model1.add(Dense(10, activation="softmax"))

model1.compile(loss="categorical_crossentropy",

optimizer="SGD",

metrics=['acc'])

# Reuse the X_train / X_val split made above; calling train_test_split again here would shrink the training set further.

h1 = model1.fit(X_train,y_train_one_hot,

epochs=50, batch_size=128,

validation_data=(X_val, y_val))

model2 = Sequential()

model2.add(Flatten(input_shape=(28,28)))

model2.add(Dense(500, activation="relu"))

model2.add(Dense(300, activation="relu"))

model2.add(Dense(100, activation="relu"))

model2.add(Dense(10, activation="softmax"))

model2.compile(loss="categorical_crossentropy",

optimizer="Adam",

metrics=['acc'])

# Reuse the X_train / X_val split made above; calling train_test_split again here would shrink the training set further.

h2 = model2.fit(X_train,y_train_one_hot,

epochs=50, batch_size=128,

validation_data=(X_val, y_val))

plt.figure(figsize=(15,5))

# model (sigmoid + SGD): training accuracy

plt.plot(h.history['acc'], label='acc',

c='blue', marker='.')

# model (sigmoid + SGD): validation accuracy

plt.plot(h.history['val_acc'], label='val_acc',

c='red', marker='.')

# model1 (relu + SGD): training accuracy

plt.plot(h1.history['acc'], label='acc1',

c='black', marker='.')

# model1 (relu + SGD): validation accuracy

plt.plot(h1.history['val_acc'], label='val_acc1',

c='green', marker='.')

# model2 (relu + Adam): training accuracy

plt.plot(h2.history['acc'], label='acc2',

c='yellow', marker='.')

# model2 (relu + Adam): validation accuracy

plt.plot(h2.history['val_acc'], label='val_acc2',

c='greenyellow', marker='.')

plt.xlabel('epochs')

plt.ylabel('accuracy')

plt.legend()

plt.show()

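model1 has noticeably lower accuracy than the others, so tune its learning rate: create the SGD optimizer with an explicit learning_rate and pass it to compile.

# Import optimizers so the optimizer's hyperparameters can be adjusted

from tensorflow.keras import optimizers

opti = optimizers.SGD(learning_rate=0.00001)

model1.compile(loss="categorical_crossentropy",

optimizer=opti,

metrics=['acc'])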

# This form also works:

# from tensorflow.keras.optimizers import SGD

# model1.compile(loss="categorical_crossentropy",

# optimizer=SGD(learning_rate=0.001),  # SGD's default learning_rate is 0.01

# metrics=['acc'])

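model1's learning rate was too high for this data, so each update jumped too far: instead of settling into the minimum, the weights overshot it and the gradient grew larger (divergence).

Lowering learning_rate makes the updates smaller, so training proceeds in finer steps.

Try several models, fix the problems that each one shows on the data, and use the model that gives the best result as the final one.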
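The effect of the learning rate can be seen on a toy problem. The sketch below is an assumption-laden illustration, not the Fashion-MNIST model: it minimizes f(w) = w² with plain gradient descent, and the function name and the three learning rates are chosen only for the example.

```python
def gradient_descent(lr, w0=1.0, steps=20):
    """Minimize f(w) = w**2 (gradient 2*w) with plain gradient descent."""
    w = w0
    for _ in range(steps):
        w -= lr * 2 * w  # each step multiplies w by (1 - 2*lr)
    return w

too_high = gradient_descent(lr=1.1)      # factor -1.2 per step: |w| grows, diverges
moderate = gradient_descent(lr=0.1)      # factor 0.8 per step: converges toward 0
too_low = gradient_descent(lr=0.00001)   # factor ~1 per step: barely moves

print(abs(too_high), abs(moderate), abs(too_low))
```

A rate that is too high overshoots and diverges, while a rate that is too low stays close to the starting point, so in practice the value is usually found by trying a few orders of magnitude.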

Finding the best model

- ModelCheckpoint: saves the model whenever it meets the given criterion

- EarlyStopping: stops training early (prevents overfitting and wasted time)

from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping

# 1. Save the model

# Set the save path and the file name pattern

save_path = '/content/drive/MyDrive/Colab Notebooks/빅데이터 13차(딥러닝)/Model/FashionModel_{epoch:03d}_{val_acc:.4f}.hdf5'

# {epoch:03d} -> d: decimal integer, 03: zero-padded to 3 digits (001, 002, 003, ...)

# {val_acc:.4f} -> f: fixed-point number, .4: 4 digits after the decimal point (e.g. 0.7447)

# hdf5: file extension of the saved model

f_mckp = ModelCheckpoint(filepath = save_path, # path of the file to save

monitor = 'val_acc', # metric used to decide whether to save the model

save_best_only =True, # save only when the monitored value sets a new best

mode = 'max', # whether "best" means the maximum or the minimum of the monitored value

verbose = 1 # print a message whenever the model improves and is saved

)
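The placeholders in the file name follow Python's format syntax. As a minimal sketch, assuming plain `str.format` behaves the same way as the substitution Keras performs, the pattern can be tried without training anything. The directory part is left out, and the values are taken from epoch 18 of the training log in this post.

```python
# Same placeholder pattern as save_path, without the directory part.
template = "FashionModel_{epoch:03d}_{val_acc:.4f}.hdf5"

# epoch is zero-padded to 3 digits; val_acc is rounded to 4 decimal places.
name = template.format(epoch=18, val_acc=0.83583)

print(name)  # FashionModel_018_0.8358.hdf5
```

Because both the epoch and the score are in the name, each improvement is written to a new file instead of overwriting the previous one.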

# 2. Stop training early

f_early = EarlyStopping(monitor = 'val_acc',

# patience: how many epochs to wait for the monitored value to improve

patience=5)

# => stop training if val_acc does not improve for 5 consecutive epochs

model3 = Sequential()

model3.add(Flatten(input_shape=(28,28)))

model3.add(Dense(500, activation="relu"))

model3.add(Dense(300, activation="relu"))

model3.add(Dense(100, activation="relu"))

model3.add(Dense(10, activation="softmax"))

model3.compile(loss="categorical_crossentropy",

optimizer="Adam",

metrics=['acc'])

h3 = model3.fit(X_train,y_train_one_hot,

epochs=50, batch_size=128,

validation_split=0.3,

# Register the ModelCheckpoint and EarlyStopping callbacks

callbacks=[f_mckp,f_early])

Epoch 1/50

49/59 [=======================>......] - ETA: 0s - loss: 25.9057 - acc: 0.5845

Epoch 1: val_acc improved from -inf to 0.74469, saving model to /content/drive/MyDrive/Colab Notebooks/빅데이터 13차(딥러닝)/Model/FashionModel_001_0.7447.hdf5

59/59 [==============================] - 1s 8ms/step - loss: 22.4326 - acc: 0.6089 - val_loss: 3.6954 - val_acc: 0.7447

Epoch 2/50

45/59 [=====================>........] - ETA: 0s - loss: 2.7720 - acc: 0.7639

Epoch 2: val_acc improved from 0.74469 to 0.78402, saving model to /content/drive/MyDrive/Colab Notebooks/빅데이터 13차(딥러닝)/Model/FashionModel_002_0.7840.hdf5

59/59 [==============================] - 0s 6ms/step - loss: 2.7500 - acc: 0.7630 - val_loss: 2.1548 - val_acc: 0.7840

Epoch 3/50

51/59 [========================>.....] - ETA: 0s - loss: 1.9121 - acc: 0.7822

Epoch 3: val_acc did not improve from 0.78402

59/59 [==============================] - 0s 4ms/step - loss: 1.8702 - acc: 0.7851 - val_loss: 1.7207 - val_acc: 0.7806

Epoch 4/50

52/59 [=========================>....] - ETA: 0s - loss: 1.3766 - acc: 0.8062

Epoch 4: val_acc improved from 0.78402 to 0.78496, saving model to /content/drive/MyDrive/Colab Notebooks/빅데이터 13차(딥러닝)/Model/FashionModel_004_0.7850.hdf5

59/59 [==============================] - 0s 5ms/step - loss: 1.3687 - acc: 0.8053 - val_loss: 1.5501 - val_acc: 0.7850

Epoch 5/50

51/59 [========================>.....] - ETA: 0s - loss: 1.0038 - acc: 0.8261

Epoch 5: val_acc improved from 0.78496 to 0.78683, saving model to /content/drive/MyDrive/Colab Notebooks/빅데이터 13차(딥러닝)/Model/FashionModel_005_0.7868.hdf5

59/59 [==============================] - 0s 7ms/step - loss: 1.0166 - acc: 0.8247 - val_loss: 1.4080 - val_acc: 0.7868

Epoch 6/50

48/59 [=======================>......] - ETA: 0s - loss: 0.8984 - acc: 0.8325

Epoch 6: val_acc improved from 0.78683 to 0.80587, saving model to /content/drive/MyDrive/Colab Notebooks/빅데이터 13차(딥러닝)/Model/FashionModel_006_0.8059.hdf5

59/59 [==============================] - 0s 6ms/step - loss: 0.8603 - acc: 0.8360 - val_loss: 1.1265 - val_acc: 0.8059

Epoch 7/50

49/59 [=======================>......] - ETA: 0s - loss: 0.5945 - acc: 0.8607

Epoch 7: val_acc did not improve from 0.80587

59/59 [==============================] - 0s 5ms/step - loss: 0.5992 - acc: 0.8590 - val_loss: 1.1016 - val_acc: 0.7993

Epoch 8/50

49/59 [=======================>......] - ETA: 0s - loss: 0.5753 - acc: 0.8646

Epoch 8: val_acc did not improve from 0.80587

59/59 [==============================] - 0s 5ms/step - loss: 0.5842 - acc: 0.8617 - val_loss: 1.0742 - val_acc: 0.8037

Epoch 9/50

46/59 [======================>.......] - ETA: 0s - loss: 0.4875 - acc: 0.8701

Epoch 9: val_acc did not improve from 0.80587

59/59 [==============================] - 0s 5ms/step - loss: 0.4730 - acc: 0.8721 - val_loss: 1.1465 - val_acc: 0.7865

Epoch 10/50

49/59 [=======================>......] - ETA: 0s - loss: 0.4554 - acc: 0.8841

Epoch 10: val_acc improved from 0.80587 to 0.81273, saving model to /content/drive/MyDrive/Colab Notebooks/빅데이터 13차(딥러닝)/Model/FashionModel_010_0.8127.hdf5

59/59 [==============================] - 0s 5ms/step - loss: 0.4601 - acc: 0.8821 - val_loss: 0.9338 - val_acc: 0.8127

Epoch 11/50

48/59 [=======================>......] - ETA: 0s - loss: 0.3542 - acc: 0.9009

Epoch 11: val_acc improved from 0.81273 to 0.82335, saving model to /content/drive/MyDrive/Colab Notebooks/빅데이터 13차(딥러닝)/Model/FashionModel_011_0.8233.hdf5

59/59 [==============================] - 0s 6ms/step - loss: 0.3486 - acc: 0.9007 - val_loss: 0.8710 - val_acc: 0.8233

Epoch 12/50

48/59 [=======================>......] - ETA: 0s - loss: 0.3074 - acc: 0.9074

Epoch 12: val_acc did not improve from 0.82335

59/59 [==============================] - 0s 5ms/step - loss: 0.3192 - acc: 0.9039 - val_loss: 0.9123 - val_acc: 0.8205

Epoch 13/50

49/59 [=======================>......] - ETA: 0s - loss: 0.3485 - acc: 0.8991

Epoch 13: val_acc improved from 0.82335 to 0.83084, saving model to /content/drive/MyDrive/Colab Notebooks/빅데이터 13차(딥러닝)/Model/FashionModel_013_0.8308.hdf5

59/59 [==============================] - 0s 6ms/step - loss: 0.3483 - acc: 0.8991 - val_loss: 0.8372 - val_acc: 0.8308

Epoch 14/50

49/59 [=======================>......] - ETA: 0s - loss: 0.2869 - acc: 0.9086

Epoch 14: val_acc did not improve from 0.83084

59/59 [==============================] - 0s 5ms/step - loss: 0.2918 - acc: 0.9063 - val_loss: 1.0467 - val_acc: 0.8012

Epoch 15/50

45/59 [=====================>........] - ETA: 0s - loss: 0.3249 - acc: 0.9035

Epoch 15: val_acc improved from 0.83084 to 0.83240, saving model to /content/drive/MyDrive/Colab Notebooks/빅데이터 13차(딥러닝)/Model/FashionModel_015_0.8324.hdf5

59/59 [==============================] - 0s 6ms/step - loss: 0.3079 - acc: 0.9065 - val_loss: 0.8271 - val_acc: 0.8324

Epoch 16/50

50/59 [========================>.....] - ETA: 0s - loss: 0.2858 - acc: 0.9086

Epoch 16: val_acc did not improve from 0.83240

59/59 [==============================] - 0s 5ms/step - loss: 0.2854 - acc: 0.9088 - val_loss: 0.8108 - val_acc: 0.8227

Epoch 17/50

51/59 [========================>.....] - ETA: 0s - loss: 0.2418 - acc: 0.9193

Epoch 17: val_acc did not improve from 0.83240

59/59 [==============================] - 0s 4ms/step - loss: 0.2424 - acc: 0.9204 - val_loss: 0.8648 - val_acc: 0.8208

Epoch 18/50

48/59 [=======================>......] - ETA: 0s - loss: 0.2415 - acc: 0.9212

Epoch 18: val_acc improved from 0.83240 to 0.83583, saving model to /content/drive/MyDrive/Colab Notebooks/빅데이터 13차(딥러닝)/Model/FashionModel_018_0.8358.hdf5

59/59 [==============================] - 0s 6ms/step - loss: 0.2311 - acc: 0.9236 - val_loss: 0.7955 - val_acc: 0.8358

Epoch 19/50

50/59 [========================>.....] - ETA: 0s - loss: 0.1551 - acc: 0.9459

Epoch 19: val_acc did not improve from 0.83583

59/59 [==============================] - 0s 5ms/step - loss: 0.1691 - acc: 0.9413 - val_loss: 0.8142 - val_acc: 0.8296

Epoch 20/50

52/59 [=========================>....] - ETA: 0s - loss: 0.2028 - acc: 0.9324

Epoch 20: val_acc did not improve from 0.83583

59/59 [==============================] - 0s 4ms/step - loss: 0.2047 - acc: 0.9314 - val_loss: 0.8487 - val_acc: 0.8324

Epoch 21/50

52/59 [=========================>....] - ETA: 0s - loss: 0.2216 - acc: 0.9274

Epoch 21: val_acc did not improve from 0.83583

59/59 [==============================] - 0s 5ms/step - loss: 0.2261 - acc: 0.9259 - val_loss: 0.7989 - val_acc: 0.8237

Epoch 22/50

46/59 [======================>.......] - ETA: 0s - loss: 0.2143 - acc: 0.9324

Epoch 22: val_acc did not improve from 0.83583

59/59 [==============================] - 0s 5ms/step - loss: 0.2363 - acc: 0.9261 - val_loss: 0.9096 - val_acc: 0.8121

Epoch 23/50

51/59 [========================>.....] - ETA: 0s - loss: 0.2412 - acc: 0.9210

Epoch 23: val_acc did not improve from 0.83583

59/59 [==============================] - 0s 4ms/step - loss: 0.2587 - acc: 0.9168 - val_loss: 0.8928 - val_acc: 0.8215

Because patience=5 was set, training stopped at epoch 23: val_acc did not improve for 5 epochs in a row after its best value at epoch 18.
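The stopping point can be reproduced by hand. The sketch below is a simplified model of the patience rule, not the Keras implementation (it ignores options such as `min_delta`), and `simulate_early_stopping` is a hypothetical helper. The `val_acc` list is copied from the training log above.

```python
# val_acc per epoch, copied from the training log above (epochs 1 to 23).
val_acc = [
    0.7447, 0.7840, 0.7806, 0.7850, 0.7868, 0.8059, 0.7993, 0.8037,
    0.7865, 0.8127, 0.8233, 0.8205, 0.8308, 0.8012, 0.8324, 0.8227,
    0.8208, 0.8358, 0.8296, 0.8324, 0.8237, 0.8121, 0.8215,
]

def simulate_early_stopping(history, patience):
    """Return (stop_epoch, best_epoch), 1-based; stop_epoch is None if never triggered."""
    best = float("-inf")
    best_epoch = None
    wait = 0  # epochs since the last improvement
    for epoch, value in enumerate(history, start=1):
        if value > best:
            best, best_epoch, wait = value, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return None, best_epoch

stop_epoch, best_epoch = simulate_early_stopping(val_acc, patience=5)
print(stop_epoch, best_epoch)  # 23 18
```

The counter resets at every new best value, which is why the shorter plateaus earlier in the run (for example epochs 7 to 9) did not stop training.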

- Loading the saved model

from tensorflow.keras.models import load_model

best_model = load_model("/content/drive/MyDrive/Colab Notebooks/빅데이터 13차(딥러닝)/Model/FashionModel_018_0.8358.hdf5")

best_model.predict(X_test)

array([[1.2695457e-10, 2.5203623e-17, 2.3950235e-16, ..., 3.3014459e-07,

3.8133068e-15, 9.9999952e-01],

[1.3051909e-11, 3.0420033e-14, 9.7948933e-01, ..., 5.6312832e-12,

3.5700381e-12, 3.5323875e-18],

[2.1418864e-10, 1.0000000e+00, 7.9275134e-22, ..., 9.7263781e-37,

0.0000000e+00, 1.9059204e-32],

...,

[8.6474822e-05, 1.3645323e-20, 7.1606593e-14, ..., 1.6143983e-15,

9.9990880e-01, 3.5296118e-17],

[2.0077379e-14, 1.0000000e+00, 1.5583279e-18, ..., 1.1253652e-21,

1.4937434e-26, 9.8883217e-24],

[6.2168227e-05, 1.1523623e-07, 1.6005430e-06, ..., 7.8996144e-02,

1.3648592e-01, 1.3039827e-03]], dtype=float32)

best_model.evaluate(X_test,y_test_one_hot)

Because the trained model was saved to disk and loaded into best_model, there is no need to run fit again every time the notebook is restarted.
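The array returned by `predict` holds one row of class probabilities per sample. A minimal sketch of turning such rows into class labels and an accuracy score follows. The probabilities and `true_labels` are hypothetical and use 4 classes for brevity, whereas the real output has 10 columns.

```python
import numpy as np

# Hypothetical probabilities for 3 samples and 4 classes.
probs = np.array([
    [0.05, 0.05, 0.10, 0.80],
    [0.10, 0.70, 0.15, 0.05],
    [0.60, 0.20, 0.10, 0.10],
])

# The predicted class is the column with the largest probability in each row.
predicted = probs.argmax(axis=1)

# Hypothetical ground-truth labels (integers, not one-hot).
true_labels = np.array([3, 1, 2])

# Fraction of samples where the prediction matches the label.
accuracy = (predicted == true_labels).mean()
print(predicted, accuracy)
```

With the real model, the same `argmax(axis=1)` call on `best_model.predict(X_test)` would give labels that can be compared against `y_test`.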
